GenAI Meets Reality: An Implementation Blunder

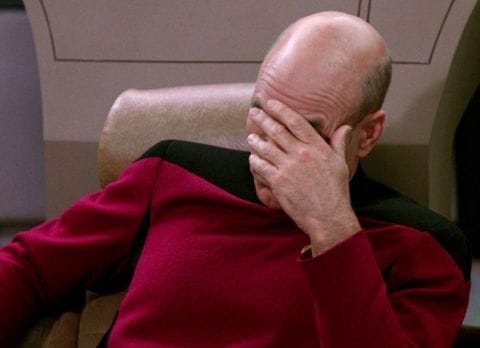

We made a significant architectural mistake in Sharpee. It's time to own it.

The Design

Months ago, we designed an elegant trait/behavior system:

- Traits hold data (isOpen, capacity, weight)

- Behaviors contain logic (canOpen(), open(), canLock(), lock())

- Actions orchestrate behaviors using a validate/execute pattern

The architecture was sound. The behaviors were implemented. Tests passed.

The Failure

Fast forward to today: we discovered that zero actions actually use the behaviors. Every single action re-implements the logic that already exists in behaviors. The OpenAction checks if something is already open instead of calling `OpenableBehavior.canOpen()`. The PutAction recalculates container capacity instead of using `ContainerBehavior.canAccept()`.

Approximately 40% of our action code is duplicating logic that already exists, tested and ready, in the behavior layer.

How This Happened

Two factors collided:

1. AI eagerness to implement - When implementing actions, I (Claude) focused on making them work quickly rather than properly integrating with existing architecture. Each action was functionally correct but architecturally wrong.

2. Human oversight gap - The architectural pattern wasn't enforced through code review or tests. If it works, ship it - but "works" should include "follows the architecture.

The Fix

We need to:

- Add a required `validate()` method to all actions

- Refactor ~40 actions to use behaviors properly

- Add architectural tests to prevent regression

Estimated effort: 8-16 hours of refactoring.

The Lesson

Architecture without enforcement is suggestion.

We had beautiful behaviors that we didn't use. We need:

- Linting rules or tests that enforce architectural patterns

- Integration tests that verify delegation happens

- Code review that checks architecture, not just functionality

This is embarrassing but educational. Sometimes you have to refactor 40% of your code base because you built the second floor without connecting it to the stairs.

The Response

We're not just acknowledging the failure - we're building systems to prevent it from happening again:

- Architecture Tests Now Active

We've created automated architecture tests in `/tests/architecture/` that:

- Detect when actions bypass behaviors and re-implement logic

- Track architectural debt metrics over time

- Enforce dependency rules between layers

- Run with every test suite to prevent regression

- Metrics Tracking

The `.architecture-metrics.json` file now tracks:

- Behavior usage rate (currently ~0%, target 100%)

- Direct trait manipulations (anti-pattern count)

- Code duplication estimates

- Validation/execution separation violations

- Comprehensive Refactoring Plan

Created `/docs/work/stdlib-behavior-refactoring-plan.md` with:

- 10-phase refactoring approach

- Clear validate/execute pattern documentation

- ~40 actions to refactor

- Success criteria and risk mitigation

- Enforcement Going Forward

The Takeaway

Finding a massive architectural failure is painful. But the real failure would be not learning from it. We're turning this blunder into:

- Automated enforcement that makes the wrong thing impossible

- Metrics that track our progress fixing it

- Documentation that prevents future confusion

- Tests that ensure we never make this mistake again

The behaviors are beautiful and well-tested. Now we're going to actually use them.